Policy Learning with Hypothesis Based Local Action Selection

2015

Conference Paper

am

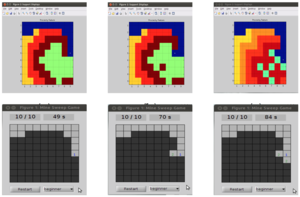

To manipulate objects in unknown and unstructured environments, a robot must be capable of operating under partial observability of the environment. Object occlusions and unmodeled environments are some of the factors that result in partial observability. A common scenario where this is encountered is manipulation in clutter. If the robot needs to locate an object of interest and manipulate it, it must perform a series of decluttering actions to accurately detect the object of interest. To perform such a series of actions, the robot also needs to account for the dynamics of objects in the environment and how they react to contact. This is a nontrivial problem, since one needs to reason not only about robot-object interactions but also about object-object interactions in the presence of contact. In the example scenario of manipulation in clutter, the state vector would have to account for the pose of the object of interest and the structure of the surrounding environment. The process model would have to account for all of the aforementioned robot-object and object-object interactions. The complexity of the process model grows exponentially as the number of objects in the scene increases, which is commonly the case in unstructured environments. Hence, it is not reasonable to attempt to model all object-object and robot-object interactions explicitly. In this setting, we propose a hypothesis-based action selection algorithm in which we construct a hypothesis set of the possible poses of an object of interest given the current evidence in the scene, and select actions based on our current set of hypotheses. This hypothesis set tends to represent the belief about the structure of the environment and the number of poses the object of interest can take. The agent's only stopping criterion is that the uncertainty regarding the pose of the object is fully resolved.
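The abstract does not spell out the algorithm, so the following is only a toy sketch of the general idea: keep a set of pose hypotheses, pick a local action, prune the set with the resulting observation, and stop once a single hypothesis remains. The one-dimensional cell world, the greedy selection rule, and the names `select_action` and `run_episode` are all assumptions made for illustration and are not taken from the paper.

```python
from typing import Dict, FrozenSet, List, Set, Tuple


def select_action(hypotheses: Set[int], actions: Dict[str, FrozenSet[int]]) -> str:
    """Assumed greedy rule: pick the action that uncovers the most cells that
    are still candidate poses. Ties go to the alphabetically first action."""
    return max(sorted(actions), key=lambda name: len(actions[name] & hypotheses))


def run_episode(
    true_cell: int,
    hypotheses: Set[int],
    actions: Dict[str, FrozenSet[int]],
) -> Tuple[List[str], Set[int]]:
    """Apply decluttering actions until the pose uncertainty is resolved
    (one hypothesis left) or no actions remain."""
    hypotheses = set(hypotheses)   # work on copies, leave the inputs untouched
    remaining = dict(actions)
    history: List[str] = []
    while len(hypotheses) > 1 and remaining:
        name = select_action(hypotheses, remaining)
        revealed = remaining.pop(name)
        history.append(name)
        if true_cell in revealed:
            hypotheses = {true_cell}   # object observed directly
        else:
            hypotheses -= revealed     # uncovered cells are ruled out
    return history, hypotheses


# Hypothetical scene: six cells, each action uncovers a fixed set of cells.
ACTIONS = {
    "lift_right": frozenset({4}),
    "push_left": frozenset({0, 1}),
    "push_mid": frozenset({2, 3}),
}
history, final = run_episode(true_cell=4, hypotheses=set(range(6)), actions=ACTIONS)
print(history, final)
```

Note that the sketch never models how objects move when pushed; it only tracks which candidate poses remain consistent with what has been uncovered, which is the simplification the abstract argues for.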

| Author(s): | Sankaran, B. and Bohg, J. and Ratliff, N. and Schaal, S. |

| Book Title: | Reinforcement Learning and Decision Making |

| Year: | 2015 |

| Department(s): | Autonomous Motion |

| Research Project(s): | Interactive Perception |

| Bibtex Type: | Conference Paper (inproceedings) |

| Paper Type: | Abstract |

| Links: | Web |

BibTex

@inproceedings{1503,
  title = {Policy Learning with Hypothesis Based Local Action Selection},
  author = {Sankaran, B. and Bohg, J. and Ratliff, N. and Schaal, S.},
  booktitle = {Reinforcement Learning and Decision Making},
  year = {2015},
  doi = {}
}