Learning to Explore in Motion and Interaction Tasks

2019

Conference Paper


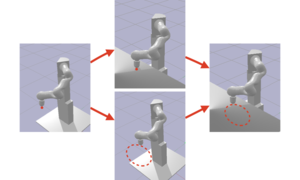

Model-free reinforcement learning suffers from the high sample complexity inherent to robotic manipulation and locomotion tasks. Most successful approaches use random sampling strategies, which lead to slow policy convergence. In this paper we present a novel approach to efficient exploration that leverages previously learned tasks. We exploit the fact that the same system is used across many tasks and build a generative model for exploration from data collected on previously solved tasks, which improves the learning of new tasks. The approach also enables continuous learning of improved exploration strategies as new tasks are learned. Extensive simulations on a robot manipulator performing a variety of motion and contact interaction tasks demonstrate the capabilities of the approach. In particular, our experiments suggest that the exploration strategy can more than double learning speed, especially when rewards are sparse. Moreover, the algorithm is robust to task variations and parameter tuning, making it beneficial for complex robotic problems.
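
The abstract describes replacing task-agnostic random exploration with a generative model fitted to data from previously solved tasks and refined as new tasks are learned. The paper's actual model is not reproduced here. The sketch below is a deliberately simplified, hypothetical stand-in: it fits an independent Gaussian per action dimension to actions logged on earlier tasks, samples exploration actions from it instead of uniform noise, and refits when a new task contributes data. All function names, the action representation, and the diagonal-Gaussian choice are assumptions made for illustration.

```python
import random
import statistics
from typing import List, Sequence, Tuple

# Hypothetical stand-in: a diagonal Gaussian, NOT the generative model from the paper.
# An "action" is assumed to be a fixed-length list of floats (e.g. joint perturbations).
Action = List[float]
GaussianModel = List[Tuple[float, float]]  # per-dimension (mean, std)


def fit_exploration_model(past_actions: Sequence[Action]) -> GaussianModel:
    """Fit an independent Gaussian per action dimension to data from solved tasks."""
    if len(past_actions) < 1:
        raise ValueError("need at least one logged action")
    dims = len(past_actions[0])
    model: GaussianModel = []
    for d in range(dims):
        column = [a[d] for a in past_actions]
        # pstdev is the population standard deviation (0.0 for a single sample).
        model.append((statistics.fmean(column), statistics.pstdev(column)))
    return model


def sample_exploration(model: GaussianModel, rng: random.Random) -> Action:
    """Draw one exploration action from the fitted model instead of uniform noise."""
    return [rng.gauss(mean, std) for mean, std in model]


def sample_uniform(dims: int, low: float, high: float, rng: random.Random) -> Action:
    """Baseline: task-agnostic uniform random exploration."""
    return [rng.uniform(low, high) for _ in range(dims)]


def update_exploration_model(
    dataset: List[Action], new_task_actions: Sequence[Action]
) -> GaussianModel:
    """Append data from a newly solved task (mutates dataset) and refit the model."""
    dataset.extend(new_task_actions)
    return fit_exploration_model(dataset)


if __name__ == "__main__":
    rng = random.Random(0)
    data = [[0.1, -0.2], [0.3, 0.0], [0.2, -0.1]]
    model = fit_exploration_model(data)
    print("model:", model)
    print("guided sample:", sample_exploration(model, rng))
    print("uniform sample:", sample_uniform(2, -1.0, 1.0, rng))
    model = update_exploration_model(data, [[0.25, -0.05]])
    print("refit after new task:", model)
```

A diagonal Gaussian ignores correlations between action dimensions and over time, so this only illustrates the data flow (fit on old tasks, sample for a new one, refit as tasks accumulate), not the expressiveness of a learned generative model.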

| Field | Value |
|---|---|
| Author(s) | Miroslav Bogdanovic and Ludovic Righetti |
| Book Title | Proceedings 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS) |
| Pages | 2686–2692 |
| Year | 2019 |
| Month | November |
| Day | 3–8 |
| Publisher | IEEE |
| Department(s) | Movement Generation and Control |
| BibTeX Type | Conference Paper (conference) |
| Paper Type | Conference |
| DOI | 10.1109/IROS40897.2019.8968584 |
| Event Name | 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS) |
| Event Place | Macau, China |
| ISBN | 978-1-7281-4004-9 |
| Note | ISSN: 2153-0866 |
| State | Published |


BibTeX

@conference{bogdanovic2019learning,

title = {Learning to Explore in Motion and Interaction Tasks},

author = {Bogdanovic, Miroslav and Righetti, Ludovic},

booktitle = {Proceedings 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS)},

pages = {2686--2692},

publisher = {IEEE},

month = nov,

year = {2019},

note = {ISSN: 2153-0866},

doi = {10.1109/IROS40897.2019.8968584},

month_numeric = {11}

}
