Cross-Topic Distributional Semantic Representations Via Unsupervised Mappings

2019

Conference Paper

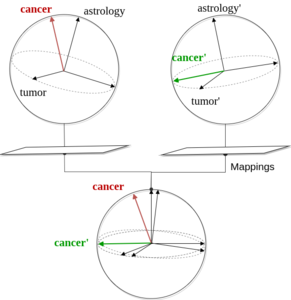

In traditional Distributional Semantic Models (DSMs) the multiple senses of a polysemous word are conflated into a single vector space representation. In this work, we propose a DSM that learns multiple distributional representations of a word based on different topics. First, a separate DSM is trained for each topic and then each of the topic-based DSMs is aligned to a common vector space. Our unsupervised mapping approach is motivated by the hypothesis that words preserving their relative distances in different topic semantic sub-spaces constitute robust semantic anchors that define the mappings between them. Aligned cross-topic representations achieve state-of-the-art results for the task of contextual word similarity. Furthermore, evaluation on NLP downstream tasks shows that multiple topic-based embeddings outperform single-prototype models.
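The alignment step described in the abstract can be illustrated with a minimal sketch. This is not the authors' implementation. It assumes that "preserving relative distances" can be measured as a small change in a word's cosine-similarity profile between two topic spaces, and that the mapping is an orthogonal (Procrustes) transform fitted on the selected anchors. All function names and the toy data are hypothetical.

```python
import numpy as np


def cosine_matrix(M):
    """Pairwise cosine similarities between the rows of M."""
    unit = M / np.linalg.norm(M, axis=1, keepdims=True)
    return unit @ unit.T


def select_anchors(X, Y, k):
    """Return indices of the k words whose similarity profile changes least.

    Assumption for illustration: 'preserving relative distances' is measured
    as the L2 norm of the difference between a word's cosine similarities to
    the whole vocabulary in the two spaces.
    """
    drift = np.linalg.norm(cosine_matrix(X) - cosine_matrix(Y), axis=1)
    return np.argsort(drift)[:k]


def orthogonal_map(X_anchor, Y_anchor):
    """Orthogonal W minimising ||X_anchor @ W - Y_anchor||_F (Procrustes)."""
    U, _, Vt = np.linalg.svd(X_anchor.T @ Y_anchor)
    return U @ Vt


# Toy data: Y is a rotated copy of X, except for five words whose vectors
# are replaced with random ones (standing in for topic-dependent senses).
rng = np.random.default_rng(0)
n_words, dim = 100, 5
X = rng.normal(size=(n_words, dim))
Q, _ = np.linalg.qr(rng.normal(size=(dim, dim)))  # random orthogonal matrix
Y = X @ Q
shifted = np.arange(5)
Y[shifted] = rng.normal(size=(len(shifted), dim))

# Fit the mapping on the anchors only, then reuse W for the whole vocabulary.
anchors = select_anchors(X, Y, k=30)
W = orthogonal_map(X[anchors], Y[anchors])
```

In this toy setting the words with replaced vectors should show the largest drift and be left out of the anchor set, so `W` is expected to land close to the rotation `Q`. Real topic-based spaces are noisier, and the anchor count `k` would need tuning.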

| Author(s): | Eleftheria Briakou and Nikos Athanasiou and Alexandros Potamianos |

| Book Title: | Annual Conference of the North American Chapter of the Association for Computational Linguistics (NAACL) |

| Year: | 2019 |

| Month: | June |

| Bibtex Type: | Conference Paper (inproceedings) |

| Paper Type: | Conference |

| Event Place: | Minneapolis, USA |

| Attachments: | pdf |

BibTeX:

@inproceedings{UTDSM:Naacl19,

title = {Cross-Topic Distributional Semantic Representations Via Unsupervised Mappings},

author = {Briakou, Eleftheria and Athanasiou, Nikos and Potamianos, Alexandros},

booktitle = {Annual Conference of the North American Chapter of the Association for Computational Linguistics (NAACL)},

month = jun,

year = {2019},

doi = {},

month_numeric = {6}

}
